I am a hardcore Go fanatic.

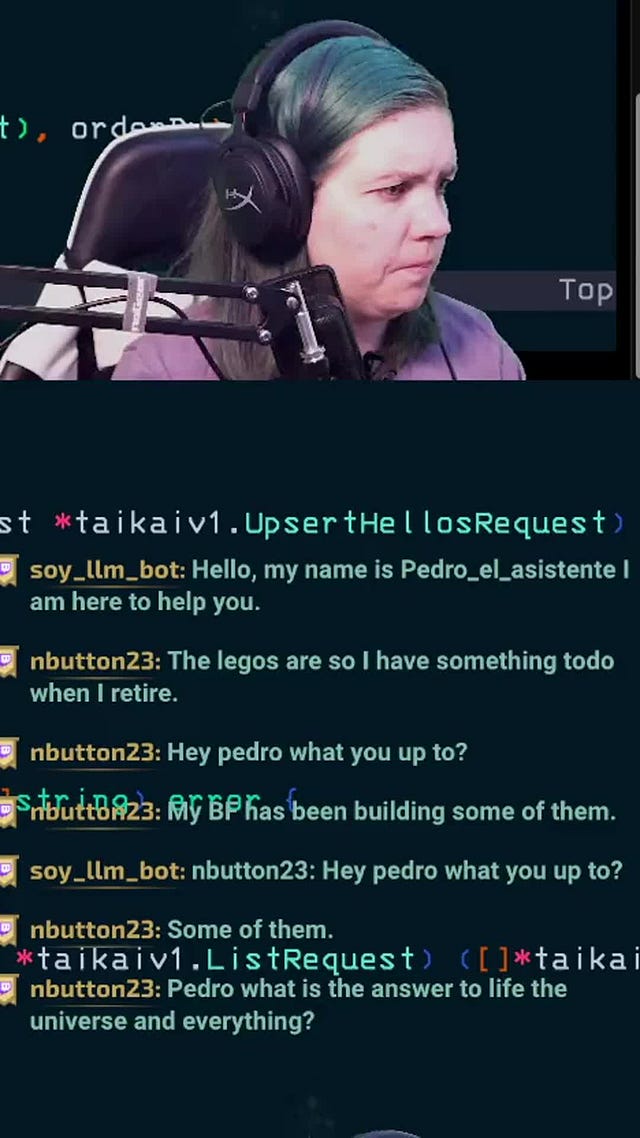

I use Go for everything, even when it is not the best fit. Whether it’s web services, CLIs, or high-performance systems, Go is the hammer to my nail. I have used Go for many atypical use cases in the past, especially data-intensive projects. My recent side project has been Pedro, a genAI project. After struggling with Pedro’s edge cases and knowing that certain things would be easier in Python, I got to thinking: where does Go stand in the rapidly evolving world of generative AI?

While Python dominates AI and machine learning, Go has its own growing ecosystem of tools and libraries for building AI-powered applications. In this post, I’ll break down the current state of AI in Go—what’s possible, what’s practical, and where Go still has some catching up to do, especially in genAI.

Statistical Reasoning in Go

Statistical reasoning is the foundation of AI. Before deep learning took over, many AI applications relied on classical statistical models. In Go, you can work with statistical models using:

gonum – Go’s equivalent of NumPy, with linear algebra, optimization, and numerical computing tools.

gota – A Go package for working with data frames, useful for preprocessing and analysis.

If you need more advanced mathematics, you can look at:

go-hep – Originally built for high-energy physics applications at CERN, it has useful mathematical utilities.

These tools make it possible to implement regression models, time-series forecasting, and other statistical techniques directly in Go.
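
As a rough illustration of the kind of model these tools support, here is a minimal ordinary least squares fit written with only the standard library. It is a sketch, not gonum's implementation: the `fitLine` helper and the sample data are made up for this example. In a real project you would more likely reach for something like gonum's `stat.LinearRegression`.

```go
package main

import (
	"errors"
	"fmt"
)

// fitLine fits y = intercept + slope*x by ordinary least squares.
// It is a hypothetical helper for illustration, not part of gonum.
func fitLine(xs, ys []float64) (intercept, slope float64, err error) {
	if len(xs) != len(ys) || len(xs) < 2 {
		return 0, 0, errors.New("need at least two paired observations")
	}
	n := float64(len(xs))
	var sumX, sumY float64
	for i := range xs {
		sumX += xs[i]
		sumY += ys[i]
	}
	meanX, meanY := sumX/n, sumY/n

	// slope = covariance(x, y) / variance(x)
	var sxy, sxx float64
	for i := range xs {
		dx := xs[i] - meanX
		sxy += dx * (ys[i] - meanY)
		sxx += dx * dx
	}
	if sxx == 0 {
		return 0, 0, errors.New("x values are all identical")
	}
	slope = sxy / sxx
	intercept = meanY - slope*meanX
	return intercept, slope, nil
}

func main() {
	// Made-up data that lies exactly on y = 1 + 2x.
	xs := []float64{0, 1, 2, 3, 4}
	ys := []float64{1, 3, 5, 7, 9}

	a, b, err := fitLine(xs, ys)
	if err != nil {
		fmt.Println("fit failed:", err)
		return
	}
	fmt.Printf("intercept=%.2f slope=%.2f\n", a, b) // prints "intercept=1.00 slope=2.00"
}
```

Returning an error for degenerate input (too few points, constant x) keeps the caller from silently dividing by zero.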

Note: In my opinion, Go has always had a weak math package. It just doesn’t handle numeric computation as well as other languages do. I think Julia has the best out-of-the-box computation, and Python leverages other languages (sometimes even Fortran) under the hood to deal with computation. Go might be fast, but as long as it is not computationally strong, it will never be the most reliable choice for getting accurate results.

Machine Learning in Go

If you’re looking to build traditional machine learning models in Go, there is only one centralized library:

GoLearn – A scikit-learn alternative for Go, supporting Naïve Bayes, k-nearest neighbors (KNN), decision trees, and even basic LSTMs. I’ve used this in several talks and blog posts—great for working with labeled datasets.
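
To make the k-nearest neighbors idea concrete, here is a small standard-library sketch of the algorithm itself. It does not use GoLearn's API. The `sample` type, the `classify` function, and the data are hypothetical and exist only for illustration.

```go
package main

import (
	"fmt"
	"math"
	"sort"
)

// sample is a labeled point; a hypothetical type for this sketch.
type sample struct {
	features []float64
	label    string
}

// euclidean returns the straight-line distance between two vectors.
// It assumes both vectors have the same length.
func euclidean(a, b []float64) float64 {
	var sum float64
	for i := range a {
		d := a[i] - b[i]
		sum += d * d
	}
	return math.Sqrt(sum)
}

// classify returns the majority label among the k training samples
// nearest to query. Ties go to the label that sorts first alphabetically.
// It returns "" when there is nothing to vote on.
func classify(train []sample, query []float64, k int) string {
	if k > len(train) {
		k = len(train)
	}
	if k < 1 {
		return ""
	}
	// Sort a copy so the caller's slice order is untouched.
	sorted := append([]sample(nil), train...)
	sort.Slice(sorted, func(i, j int) bool {
		return euclidean(sorted[i].features, query) < euclidean(sorted[j].features, query)
	})

	votes := map[string]int{}
	for _, s := range sorted[:k] {
		votes[s.label]++
	}
	best, bestVotes := "", 0
	for label, n := range votes {
		if n > bestVotes || (n == bestVotes && label < best) {
			best, bestVotes = label, n
		}
	}
	return best
}

func main() {
	// Made-up training data: two well-separated clusters.
	train := []sample{
		{[]float64{1, 1}, "small"},
		{[]float64{1, 2}, "small"},
		{[]float64{2, 1}, "small"},
		{[]float64{8, 8}, "large"},
		{[]float64{9, 8}, "large"},
		{[]float64{8, 9}, "large"},
	}
	fmt.Println(classify(train, []float64{2, 2}, 3)) // prints "small"
}
```

A library like GoLearn adds the parts this sketch skips, such as loading datasets and evaluating accuracy.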

If you train models in Python but want to run inference in Go, you can use **ONNX (Open Neural Network Exchange)**. This lets you train a model using scikit-learn or TensorFlow and deploy it in a Go application for inference.

Beyond GoLearn, there are a lot of small home-grown or one-off projects from individual maintainers. The lack of strong, consistently maintained open-source machine learning projects is a persistent weakness of the Go community. To ship something to production, it needs to be secure and maintained. This means that Go packages will likely remain hobby-use only until there is a paid entity responsible for maintenance and security. Having large backing organizations is critical to the stability, adoptability, and success of large Python packages like NumPy or scikit-learn. Until open-source data and machine learning projects in Go meet a higher standard of supply chain security and maintain a clean dependency tree (which is too much to ask of a one-person maintainer), Go won't be the best choice for machine learning.

Deep Learning in Go

While PyTorch and TensorFlow will most likely continue to dominate this space, deep learning in Go is still possible. If you want to train or run neural networks in Go, your best option is:

Gorgonia – A deep learning library for Go that provides an API similar to TensorFlow. Some people have used it in production, though I haven’t personally.

Note: If you need serious deep learning capabilities, you’re probably better off calling a model that is hosted with a Python API from a Go application.
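
Here is a minimal sketch of that pattern: a Go client posting JSON to a model service over HTTP. Everything about the service is an assumption made for this example, including the `/predict` path and the request and response fields. The `fakeModelServer` function stands in for the Python side so the sketch runs on its own.

```go
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
	"net/http"
	"net/http/httptest"
	"time"
)

// predictRequest and predictResponse describe a hypothetical JSON contract.
type predictRequest struct {
	Text string `json:"text"`
}

type predictResponse struct {
	Label string  `json:"label"`
	Score float64 `json:"score"`
}

// predict posts text to baseURL + "/predict" and decodes the JSON reply.
func predict(client *http.Client, baseURL, text string) (predictResponse, error) {
	var out predictResponse
	body, err := json.Marshal(predictRequest{Text: text})
	if err != nil {
		return out, err
	}
	resp, err := client.Post(baseURL+"/predict", "application/json", bytes.NewReader(body))
	if err != nil {
		return out, err
	}
	defer resp.Body.Close()
	if resp.StatusCode != http.StatusOK {
		return out, fmt.Errorf("model service returned %s", resp.Status)
	}
	err = json.NewDecoder(resp.Body).Decode(&out)
	return out, err
}

// fakeModelServer stands in for the Python service and always
// answers with the same canned prediction.
func fakeModelServer() *httptest.Server {
	return httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		if r.URL.Path != "/predict" {
			http.NotFound(w, r)
			return
		}
		var req predictRequest
		if err := json.NewDecoder(r.Body).Decode(&req); err != nil {
			http.Error(w, "bad request", http.StatusBadRequest)
			return
		}
		w.Header().Set("Content-Type", "application/json")
		json.NewEncoder(w).Encode(predictResponse{Label: "positive", Score: 0.5})
	}))
}

func main() {
	srv := fakeModelServer()
	defer srv.Close()

	// A timeout keeps a slow model from hanging the Go service.
	client := &http.Client{Timeout: 5 * time.Second}
	res, err := predict(client, srv.URL, "Go is fun")
	if err != nil {
		fmt.Println("prediction failed:", err)
		return
	}
	fmt.Printf("label=%s score=%.2f\n", res.Label, res.Score)
}
```

In a real deployment, `srv.URL` would be replaced by the address of the hosted model, and the structs would follow whatever schema that service defines.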

As with classical machine learning, there are a bunch of small deep learning libraries, but they are all maintained after hours. I don’t really recommend using them: without someone maintaining them full time, the missing features and the security exposure make them high risk for any org, especially one that wants stable AI running in production.

LLMs & AI Applications in Go

Now onto the fun part—building AI applications in Go with large language models (LLMs). If you want to interact with LLMs, you have two main options:

GenKit – Built by Google and the Go team, this provides tools to interface with models.

LangChain-Go – A Go equivalent of LangChain that supports OpenAI, Llama, Mistral, and Claude. This is my personal favorite because it's easy to integrate different models.

Both GenKit and LangChain-Go allow you to:

✅ Call LLM APIs

✅ Use vector databases for semantic search

The natural extension of genAI applications is RAG (Retrieval-Augmented Generation). Basically, it combines the two steps above, calling an API and doing a vector search, to produce domain-specific text generation. However, RAG in Go is still rudimentary. Unlike Python, which has full-fledged retrieval pipelines built into open-source projects, Go requires you to handle your own queries and pass results back to the model manually.
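
To give a sense of what "manually" means here, this is a minimal sketch of the retrieval half of RAG: rank documents by cosine similarity, then paste the best matches into a prompt. The three-dimensional embeddings are made up. In a real application they would come from an embedding model and usually live in a vector database. The `document` type and the `topK` and `buildPrompt` helpers are hypothetical names for this example.

```go
package main

import (
	"fmt"
	"math"
	"sort"
	"strings"
)

// document pairs a text chunk with its embedding vector.
type document struct {
	text      string
	embedding []float64
}

// cosine returns the cosine similarity of two equal-length vectors,
// or 0 if either vector has zero length.
func cosine(a, b []float64) float64 {
	var dot, na, nb float64
	for i := range a {
		dot += a[i] * b[i]
		na += a[i] * a[i]
		nb += b[i] * b[i]
	}
	if na == 0 || nb == 0 {
		return 0
	}
	return dot / (math.Sqrt(na) * math.Sqrt(nb))
}

// topK returns the k documents most similar to the query embedding.
func topK(docs []document, query []float64, k int) []document {
	ranked := append([]document(nil), docs...) // copy; leave input order alone
	sort.SliceStable(ranked, func(i, j int) bool {
		return cosine(ranked[i].embedding, query) > cosine(ranked[j].embedding, query)
	})
	if k > len(ranked) {
		k = len(ranked)
	}
	if k < 0 {
		k = 0
	}
	return ranked[:k]
}

// buildPrompt places the retrieved text ahead of the user's question.
func buildPrompt(question string, context []document) string {
	var b strings.Builder
	b.WriteString("Answer using only the context below.\n\nContext:\n")
	for _, d := range context {
		b.WriteString("- " + d.text + "\n")
	}
	b.WriteString("\nQuestion: " + question)
	return b.String()
}

func main() {
	// Made-up embeddings, one axis per topic.
	docs := []document{
		{"gonum offers linear algebra and numerical tools.", []float64{1, 0, 0}},
		{"gota works with data frames.", []float64{0, 1, 0}},
		{"Gorgonia is a deep learning library for Go.", []float64{0, 0, 1}},
	}
	query := []float64{0.1, 0.9, 0.2} // pretend embedding of the question
	hits := topK(docs, query, 1)
	fmt.Println(buildPrompt("Which package handles data frames?", hits))
}
```

The resulting prompt string is what you would then send to the LLM API of your choice.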

Can You Build AI Agents** in Go?

Short answer: technically, yes—but I don’t recommend it.

There’s no Go equivalent of frameworks like LangChain’s agents or AutoGen. If you want to create an AI agent in Go, you’d have to:

Use an LLM (like OpenAI or Llama).

Set the temperature to near zero for deterministic responses.

Manually implement logic for text comparisons and triggering actions.
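
The three steps above can be sketched roughly as follows. The `completer` interface is a hypothetical stand-in for whatever LLM client you use, and `stubLLM` returns a canned reply so the example runs without a model. None of this reflects a real SDK's API.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// completer is a hypothetical interface; a real implementation
// would wrap an LLM client such as one for OpenAI or Llama.
type completer interface {
	Complete(prompt string, temperature float64) (string, error)
}

// stubLLM ignores the prompt and returns a canned reply.
type stubLLM struct{ reply string }

func (s stubLLM) Complete(prompt string, temperature float64) (string, error) {
	return s.reply, nil
}

// action is something the agent can trigger in the host program.
type action func() string

// runAgent asks the model to pick an action by name, then matches
// the reply text against the known actions and runs the match.
func runAgent(llm completer, actions map[string]action, userInput string) (string, error) {
	names := make([]string, 0, len(actions))
	for name := range actions {
		names = append(names, name)
	}
	sort.Strings(names) // stable prompt regardless of map order

	prompt := "Reply with exactly one of: " + strings.Join(names, ", ") +
		"\nUser: " + userInput

	// Temperature near zero keeps replies as repeatable as the model allows.
	reply, err := llm.Complete(prompt, 0.0)
	if err != nil {
		return "", err
	}

	// Normalize before comparing: models often add whitespace or change case.
	choice := strings.ToLower(strings.TrimSpace(reply))
	act, ok := actions[choice]
	if !ok {
		return "", fmt.Errorf("model chose unknown action %q", choice)
	}
	return act(), nil
}

func main() {
	actions := map[string]action{
		"status":  func() string { return "all systems normal" },
		"restart": func() string { return "restarting service" },
	}
	llm := stubLLM{reply: " Status\n"} // pretend model output
	out, err := runAgent(llm, actions, "How is the service doing?")
	if err != nil {
		fmt.Println("agent error:", err)
		return
	}
	fmt.Println(out) // prints "all systems normal"
}
```

Rejecting replies that match no known action is the important design choice: the model only ever selects from functions the program already exposes.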

While this is possible, Go isn't designed for AI agent development at scale. However, I’d love to hear if anyone has attempted it—drop a comment if you have! I know I want to try in Pedro (my hobby project). I think for Go to gain real traction with genAI, it needs to be useful for AI in all applications, because in practice, they all work together to make a cohesive production grade application.

**NOTE: The definition of an AI agent is quickly changing. Right now the definition I am using is a text interface to interact with software or programmatically call a software function.

Final Thoughts and What's Next

AI in Go has come a long way, but it’s still evolving. If you need statistical learning or classical ML, GoLearn is a solid option. For deep learning, Gorgonia exists but isn’t widely used. If you want to build LLM-based apps, LangChain-Go and GenKit work well but still have limitations compared to Python.

If you’re interested in hands-on examples, let me know what you’d like to see! I have working examples for:

✅ LLM-powered chat interfaces

✅ Machine learning classification models

✅ Vector search with embeddings

To look to the future, I’m exploring an AI and Go bootcamp—if you’d like to be part of an alpha cohort, fill out this form. Let’s push the boundaries of AI in Go together!

Thanks for the nice writeup!

Although not strictly "AI in Go", I find Ollama [1] to be a great example of how Go is still very successful at least for AI *tooling*. It just hasn't got that much into the actual algorithms.

On the other hand, if Go continues to excel in tooling and infrastructure, I don't see anything preventing it from eventually starting to find its way into the algos too, given that the performance continues to improve, and innovation around the language continues.

I would be particularly interested in if LLM-based tools could help with automatic re-implementation of certain algorithms into Go, which would have huge benefits from the simplified deployment etc.

In my view Go could be an awesome platform to constitute the "WASM" of AI algorithms, for improved portability.

[1] https://ollama.com/

I just bumped into the article and missed a reference to GoMLX (https://github.com/gomlx/gomlx), currently the most developed ML framework for Go.

Gorgonia unfortunately didn't develop far enough to be viable to many applications: both in breadth of the ML library (many missing optimizers/layers) and lack of an efficient backend/engine for larger models (fine-tuning a small LLM, or image generation, etc.)

GoMLX leverages XLA (same engine used by Jax/TensorFlow) for efficient training and inference (on Linux only, support for CPUs with SIMD, GPUs and TPUs), but also has a pure Go backend (like Gorgonia) for portability (e.g., run on WASM or on an embedded device?), though much slower.

Also GoMLX went much further into implementing various ML functionality, which I hope (I'm the author) covers some 90% of the ML use cases. Still far from the breadth of what PyTorch/Jax/TensorFlow offer, but at least it is not Python :)