Why GenAI Infrastructure Feels Backwards

And How Go Could Fix It

TL;DR

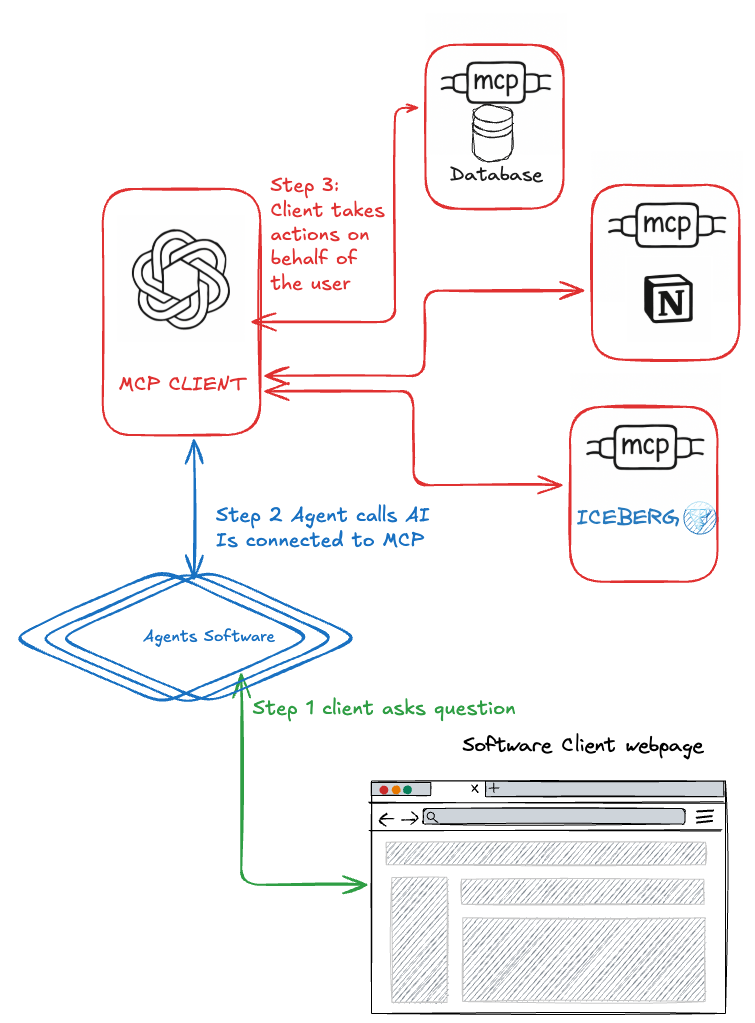

Today’s GenAI tools are built for client-facing products. That’s fine: they’re driving value fast. But this has led to inverted architectures (like MCP) where models are treated as clients and UIs as servers.

Eventually, we’ll need server-side agents: reliable, concurrent, infrastructure-first components. Go is poorly suited for training, but ideal for running these agents at scale. I believe the long bet is on Go, Rust, and Zig powering GenAI infra, not just Python and TypeScript.

The GenAI ecosystem feels backwards to me.

I don’t mean that cynically or dismissively. There are a ton of smart engineers building high-impact products in the generative AI space. But if you come from a systems background, you can’t help but feel like the current architecture is missing something. My current deduction is that we’re building most of this stack from the client down rather than from the infrastructure up. This is great for prototypes, but it breaks when it comes time to scale.

How We Got Here

AI has essentially forced us to reimagine everything we knew about software. Models are changing and improving so fast that we can’t keep up. In times like these (think dot-com boom or mobile app revolution), the people who are not weighed down by old paradigms and patterns drive innovation. This means that the people building the coolest AI tools right now (the real game-changing ones) have the least experience with real production outages, security breaches, and the like. Because the front end is the easiest place to start building software, they are often front-end focused, and they look for tools and frameworks to fill in their experience gaps.

Look at the most popular tools in the GenAI space right now: LangChain, Langfuse, OpenAI’s SDKs, HumanLoop, and every chatbot starter repo out there. Nearly all of them assume you’re building from the front end. JavaScript and Python dominate, and the first few tutorials usually live inside a Next.js app or a Jupyter notebook. Thus, we see that’s where the developer momentum is!

Tools like LangChain and Langfuse are optimized for velocity. You can wire up a prototype in minutes, test user behavior in real time, and deploy something customer-facing long before you worry about reliability or throughput. That’s a powerful thing. It allows for creativity from the next generation of engineers. Yes, I think I could do the same things these platforms do by just writing APIs and Prometheus metrics, but that doesn’t mean it's always the answer. These tools aren’t “wrong”—they’re just optimized for early product-market fit by those who can get it.

And I want to say this clearly: I respect that. I’m not here to dunk on front-end focused work. Honestly, it’s hard. Building interfaces that work, scale, and make sense to users is a real craft. My bias is toward backends and data-intensive systems, but I know where value is generated: customer interaction. That’s where the money starts flowing, and one day, when these systems need to scale, I will be ready to jump in and help.

When I Saw TypeScript in MCP, It All Clicked

A few weeks ago, I was reading the Model Context Protocol (MCP) documentation because I wanted to make Pedro (my Discord/Twitch AI bot) more modular and intelligent. MCP is a spec that describes how models, acting as clients, can interact with multi-agent workflows and multiple data stores. It’s still early, but it’s promising. Right now, everyone says, "just put an MCP server in front of your database and let Claude access it." (Don’t do that, by the way. The only thing that should read from a production database is a production software application. That is basic governance.)

What caught me off guard was that the official implementation examples were in Python and TypeScript. I wanted a Go one!* Go is great at making web calls thanks to its first-class net/http package, but there was no Go implementation.

*A Go implementation came out recently, but after I drafted this.

I get Python. It’s the lingua franca of AI. But TypeScript? It’s not a language built for math or compute-intensive workloads. It’s not something you’d reach for when building vector stores, doing token math, or streaming outputs from a model. And yet, there it was—used as a “major” language for building AI interactions.

That’s when it hit me. The reason TypeScript is everywhere in GenAI tooling is because the architecture is still fundamentally client-first. Even something as infrastructure-ish* as MCP is modeled from the assumption that the customer-facing app (the UI) is the “server,” and the model—the thing doing the hard compute—is the “client.”

That’s upside down. But it’s also a reflection of where we are.

*MCP is not a good software systems paradigm right now. It is missing some fundamental things:

Access controls. It gives too much freedom to the LLM. Basically, ChatGPT gets access to all the servers and all the agents and has the freedom to act between them as the “agent” sees fit. There is no guarantee that certain apps only have access to related data stores.

Orchestration. It offloads too much to ChatGPT. The agent engine determines what calls to make and when to make them, which makes behavior too hard to predict for production systems. Customers like deterministic behavior; if your software behaves in an unexpected way, that is a bug, and it causes them to abandon your software.

Basic security. If a data store has PII, who knows when and how that will be leveraged and shared?

I think this deserves a follow-up post.

The Next Era: Server-Side Agents

Right now, we’re building GenAI experiences like web widgets. The model is a thing we ping. The business logic is mostly in the client. The AI tools are just chatbots on a website, rarely long-running services. And that works, for now.

But the next phase of GenAI is going to demand server-side agents: long-lived, highly available, infrastructure-level components that live close to the models and do more than just relay a prompt. These agents will need to:

Maintain session state over time

Manage multi-turn conversations without leaking memory

Call tools or APIs autonomously

Cache context and vector search results

Handle multiple requests concurrently

Run reliably without human babysitting

In other words, they need to act like real services, not client-side helpers.

I’ve felt this in my own projects since I started hosting models in my home lab. I am not leveraging OpenAI or Anthropic, so my user experience is 100% homegrown and really bad. I don’t have a good client interface (spoiler: it’s Discord), and the server infrastructure is still lacking. Cold starts hurt. Model startup time matters. Logging, tracing, and retries aren’t nice-to-haves when you’re self-hosting; they’re table stakes.

When you own the GPU, the network, and the uptime, you start thinking very differently. I can’t just spin up Langfuse because it is built for third-party AI. I can’t just use MCP servers because I would have to build my own client and put it in between the service and the model. I have to build from the server up instead of the client down, and it breaks the paradigm.

Why Go Could Help Us Get There

As someone who writes a lot of Go and builds backend systems, this feels like skipping a few essential layers. There’s too much emphasis on UX-first wrappers, and not enough on fast, long-lived services that do real work behind the scenes. While that’s okay for now, it’s not the future I think we’re heading toward. Here’s where I come back to Go.

I’m not here to say “rewrite LangChain in Go” (p.s., that is done and I use it); that would be pointless. Go doesn’t have the math ecosystem, the GPU tools (we use Cgo for that), or the momentum to replace Python for model training or experimentation.

But if you’re building a server-side agent—something that calls models, manages pipelines, handles failures, and lives in production—Go is exactly what you want.

Here’s why:

Fast startup and low memory footprint — critical for cold starts and container orchestration

Native concurrency — lets you manage multiple model contexts without resorting to thread pools or async spaghetti

Simplicity and reliability — Go services are easy to reason through and deploy

Static binaries — no Python environment hell, just ship and run

Great ecosystem for observability, including native expvar, Prometheus exporters, and structured logging

You don’t need Go to train the model. You need it to orchestrate the system around it.

We saw the same thing happen in web development. Prototypes started in PHP or Rails. But when companies needed scale, uptime, and service boundaries, they reached for Go.

I think that’s where GenAI infra is going, too.

Postscript: Why Go Isn’t There Yet

I’ll be honest—Go isn’t present in today’s AI landscape because it’s not the right tool for early experiments:

The math libraries are basic

There’s no deep ecosystem for neural nets

The community energy is focused elsewhere (and rightly so)

Most GenAI toolkits assume you’re in Python or JS

And that’s fine.

Go isn’t going to be the hero of the prototype phase. But once you need fast, stable, composable, and deployable agents? Once your AI needs to run on a box 24/7 with <100ms latency? Go is waiting.

It always has been.